Once upon a time, LLMs had a problem that made it nearly impossible to depend on their output in production: converting unstructured data into structured output was unreliable. What does this mean? If you fed an LLM raw or mixed text and asked it to produce a CSV representing the information in it, the results would vary from query to query. Sometimes the LLM would misspell field names or omit them entirely.

OpenAI has since released Structured Outputs, which it claims guarantees consistency: the model will generate responses that conform to a JSON Schema you provide.
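To make "conform to a JSON Schema" concrete, here is roughly what a schema for the agent suggestion used later in this post could look like, plus a hand-rolled check of a small subset of JSON Schema (`required`, string `type`, and `additionalProperties: false`). This is a minimal sketch, not a full validator, and the names `AGENT_SCHEMA` and `conforms` are hypothetical. The promise of Structured Outputs is that a response passes this kind of check every time, not just most of the time.

```python
import json

# Hypothetical JSON Schema for the agent suggestion used later in this post.
AGENT_SCHEMA = {
    "type": "object",
    "properties": {
        "name": {"type": "string"},
        "goal": {"type": "string"},
        "backstory": {"type": "string"},
    },
    "required": ["name", "goal", "backstory"],
    "additionalProperties": False,
}


def conforms(raw: str, schema: dict) -> bool:
    """Check a model response against a small subset of JSON Schema.

    Handles only an object with string properties, `required`, and
    `additionalProperties: false`. Not a general-purpose validator.
    """
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return False  # not even valid JSON
    if not isinstance(data, dict):
        return False
    props = schema["properties"]
    # Every required field must be present.
    if any(key not in data for key in schema["required"]):
        return False
    # No unexpected (for example, misspelled) field names.
    if not schema.get("additionalProperties", True) and any(key not in props for key in data):
        return False
    # Declared string fields must actually be strings.
    return all(
        isinstance(data[key], str)
        for key, spec in props.items()
        if key in data and spec.get("type") == "string"
    )


print(conforms('{"name": "writer", "goal": "g", "backstory": "b"}', AGENT_SCHEMA))  # True
print(conforms('{"nme": "writer", "goal": "g", "backstory": "b"}', AGENT_SCHEMA))   # False: misspelled key
```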

Which Models are the best for Structured Output?

Before OpenAI released Structured Outputs, there were a few alternatives: models and open-source tools you could combine to achieve similar results. Developers tried LLMs like Llama 3 8B Instruct and Hermes 2 Theta, but in production these would likely fail at some point, which is bad for user experience.

OpenAI and Claude don't work well either?

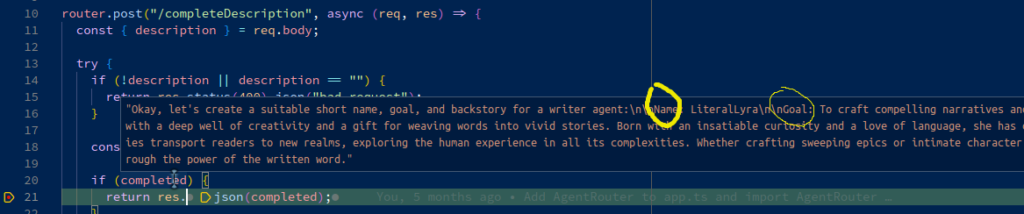

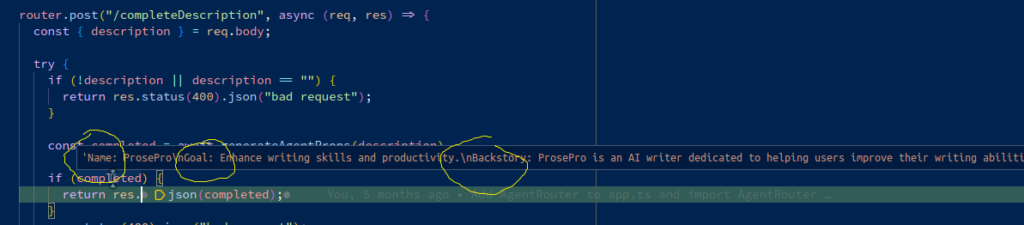

Here's a problem I need Structured Outputs to fix. On Tohju.com, users can have an Agent description suggested or generated when creating an agent: a user types in a short description, and Tohju suggests a name, goal, and backstory for the Agent.

It's currently breaking. The model should return newline-separated values, which I parse into the name, goal, and backstory fields.
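The parsing code isn't shown here, so the following is a hypothetical reconstruction of that kind of newline-based parser (`parse_suggestion` is an illustrative name, not Tohju's actual code). It shows why the approach is brittle: it only works if the model emits exactly three lines with the expected labels in the expected order, and a friendly preamble like "Sure! Here's a suggestion:" is enough to break it.

```python
def parse_suggestion(text: str) -> dict:
    """Hypothetical parser for 'Name: ...\\nGoal: ...\\nBackstory: ...' output."""
    # Drop blank lines, then expect exactly three labelled lines in order.
    lines = [line.strip() for line in text.strip().splitlines() if line.strip()]
    if len(lines) != 3:
        raise ValueError(f"expected 3 lines, got {len(lines)}")
    result = {}
    for label, line in zip(("Name", "Goal", "Backstory"), lines):
        prefix = f"{label}:"
        if not line.startswith(prefix):
            raise ValueError(f"expected line starting with {prefix!r}, got {line!r}")
        result[label.lower()] = line[len(prefix):].strip()
    return result


good = "Name: BrillianChatBot\nGoal: Provide smart answers.\nBackstory: You are a chat bot."
print(parse_suggestion(good)["name"])  # BrillianChatBot

chatty = "Sure! Here's a suggestion:\n" + good
try:
    parse_suggestion(chatty)
except ValueError as err:
    print("parse failed:", err)  # parse failed: expected 3 lines, got 4
```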

This is the output when using Claude. OpenAI handles the problem better.

Here is the prompt I used.

On Tohju, users can choose a model before sending a query, so if any of those models produces unreliable output, the user experience suffers.

How to use Structured Outputs with LangChain across different models

Different model providers have found their own ways to implement structured output. Common strategies include prompting, tool calling, function calling, and JSON mode. My problem is that I need a common interface for all models that produces the same result from the same input parameters. This is where LangChain's structured output feature comes in.
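The idea behind a common interface can be sketched without any provider SDK. Below, two hypothetical backends return the same information in different shapes (tool-call arguments as a dict, JSON mode as a raw string), and one function normalizes both into the same typed result. All names here (`AgentSuggestion`, `from_tool_call`, `from_json_mode`, `structured_output`) are illustrative assumptions; in the real code, LangChain's `with_structured_output` plays this role for chat models.

```python
import json
from dataclasses import dataclass
from typing import Any, Callable, Dict


@dataclass(frozen=True)
class AgentSuggestion:
    name: str
    goal: str
    backstory: str


def from_tool_call(tool_args: Dict[str, Any]) -> Dict[str, Any]:
    # Tool/function calling: arguments typically arrive already parsed.
    return tool_args


def from_json_mode(text: str) -> Dict[str, Any]:
    # JSON mode: the model returns a JSON string that still needs parsing.
    return json.loads(text)


def structured_output(raw: Any, extract: Callable[[Any], Dict[str, Any]]) -> AgentSuggestion:
    """Normalize any backend's raw response into the same typed object."""
    data = extract(raw)
    # A missing field raises KeyError here instead of slipping through.
    return AgentSuggestion(name=data["name"], goal=data["goal"], backstory=data["backstory"])


a = structured_output({"name": "writer", "goal": "g", "backstory": "b"}, from_tool_call)
b = structured_output('{"name": "writer", "goal": "g", "backstory": "b"}', from_json_mode)
print(a == b)  # True
```

The caller only ever sees `AgentSuggestion`, regardless of which strategy produced it; that is the property the post is after when users can switch models.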

The current code looks like this.

def complete_agent_description(task: str):

"""

Given the description of an Agents expertise, get the goal and backstory for an agent.

"""

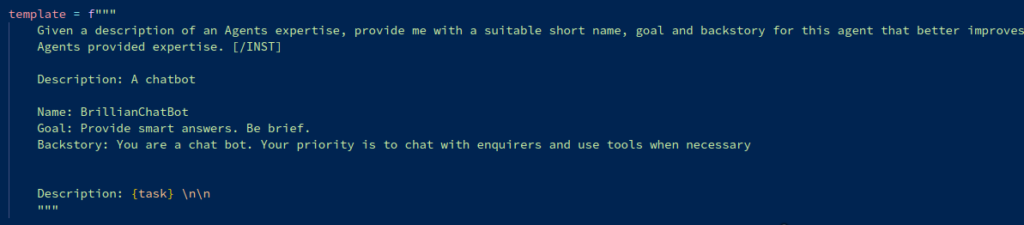

template = f"""

Given a description of an Agents expertise, provide me with a suitable short name, goal and backstory for this agent that better improves the Agents provided expertise. [/INST]

Description: A chatbot

Name: BrillianChatBot

Goal: Provide smart answers. Be brief.

Backstory: You are a chat bot. Your priority is to chat with enquirers and use tools when necessary

Description: {task} \n\n

"""

# print(template)

llm = current_basic_model()

qa = ConversationChain(llm=llm)

response = qa.invoke(input=template)

return response["response"]Its got a bit of prompting from Mixtral models. Lets just ignore that.

First, I'll import what I need at the top of my file.

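```python
# langchain_core.pydantic_v1 is a Pydantic v1 shim; in LangChain 0.3+ it is
# deprecated and BaseModel/Field can be imported from pydantic directly.
from langchain_core.pydantic_v1 import BaseModel, Field
```

Next, define my model.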

```python
class CreateAgentSuggestion(BaseModel):
    # The Field descriptions are included in the schema the model sees,
    # so they double as per-field instructions.
    name: str = Field(description="Short name for the Agent. No spaces. eg. BrillianChatBot")
    goal: str = Field(description="A goal for the agent. eg. Provide smart answers. Be brief")
    backstory: str = Field(description="A backstory for the agent. eg. You are a chat bot. Your priority is to chat with enquirers and use tools when necessary")
```

Finally, I can modify the function so the llm instance returns structured output:

def complete_agent_description(task: str):

"""

Given the description of an Agents expertise, get the goal and backstory for an agent.

"""

template = f"""

Given a description of an Agents expertise, provide me with a suitable short name, goal and backstory for this agent that better improves the Agents provided expertise.

Description: {task} \n\n

"""

template = f"""

Description: {task} \n\n

"""

# print(template)

llm = current_basic_model() #function that specifies current chat model

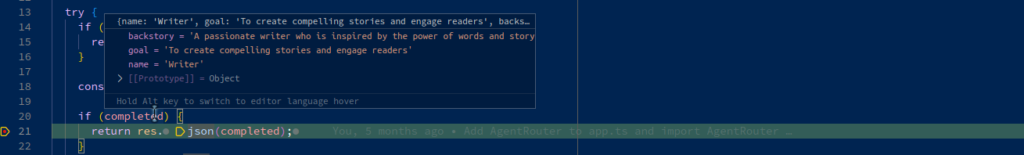

response = llm.with_structured_output(schema=CreateAgentSuggestion).invoke(template)

return response Run the code.

It worked. ✅

```json
{
  "name": "writer",
  "goal": "to create engaging and impactful stories",
  "backstory": "Born with a vivid imagination, this writer has the ability to weave words into captivating narratives that resonate with readers."
}
```

Conclusion

I did run into issues with the code examples on the LangChain website, but after a bit of hacking I got it working.

Instead of a free-form string, the application now gets back an object with a predefined structure (here, a `CreateAgentSuggestion` with `name`, `goal`, and `backstory`).
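One caveat about that predefined structure: it covers which fields exist and their types, not the prose rules written inside a `Field` description. Nothing enforces "No spaces" in `name`, for example. If a rule like that matters, a small post-check is cheap. The sketch below is a hypothetical helper (`check_suggestion` is not part of LangChain) that works on a plain dict, such as the JSON output shown earlier.

```python
import re

# Hypothetical post-check for rules that live only in Field descriptions.
NAME_PATTERN = re.compile(r"\S+")  # "No spaces": one or more non-whitespace chars


def check_suggestion(data: dict) -> list:
    """Return a list of problems; an empty list means the suggestion looks fine."""
    problems = []
    for key in ("name", "goal", "backstory"):
        if not str(data.get(key, "")).strip():
            problems.append(f"{key} is empty")
    name = str(data.get("name", ""))
    # Only check spacing when there is a name at all, to avoid a duplicate error.
    if name.strip() and not NAME_PATTERN.fullmatch(name):
        problems.append("name must not contain spaces")
    return problems


print(check_suggestion({"name": "writer", "goal": "g", "backstory": "b"}))        # []
print(check_suggestion({"name": "Story Writer", "goal": "g", "backstory": "b"}))  # ['name must not contain spaces']
```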

Overall, structured output support in LangChain is a significant enhancement: a single interface (`with_structured_output`) works across model providers, which makes production systems that depend on structured data more reliable and removes the need for per-model parsing code.